YouTube's Recommendation Fiasco 📺

GPT-2 goes viral. The sun's secret heartbeat. Blockchain survey receives mixed results.

Do you enjoy this newsletter? Consider forwarding it to a friend or colleague.

What do your recommended videos say about you?

YouTube is the second most visited website on the planet (behind Google). Its free video streaming service drives engagement through a feature that recommends similar videos based on your viewing history. Its business model revolves around individuals spending as much time as possible on the site in order to increase the impressions that drive advertising revenue.

Today I want to discuss recommendation systems, how they work, and why the story above represents a larger trend that shapes our digital interactions.

The recommendation bar

These days, I mostly use YouTube for streaming music while I work. Each time I visit the site I am presented with a curated list of videos that are personalized based on everything YouTube knows about me. Since my Google account is linked, this includes all of my search history and internet preferences in addition to my YouTube viewing history. When a video ends, the next selection is chosen from the recommendation bar and will automatically begin if I don’t disable the Autoplay option. Remember, the metric that is being optimized is minutes of content watched.

Recommendation systems are not a novel concept.

When you sign up for Netflix, you are asked to rank which movies you enjoy; this information helps to create a personalized experience for the next time that you log in.

Your “Recommended Friend” feed on Facebook is based on your complex social graph as well as the friend requests you send or receive.

Spotify generates custom playlists that use your play count and likes to match your musical tastes and preferences.

The concept of personalization has seeped into our digital experiences so deeply that we barely notice it anymore. So, what’s actually happening?

How recommendations are generated

Recommendation systems represent one of the most successful and widely implemented applications of machine learning. At their core, these systems attempt to predict your reaction to a new topic or input.

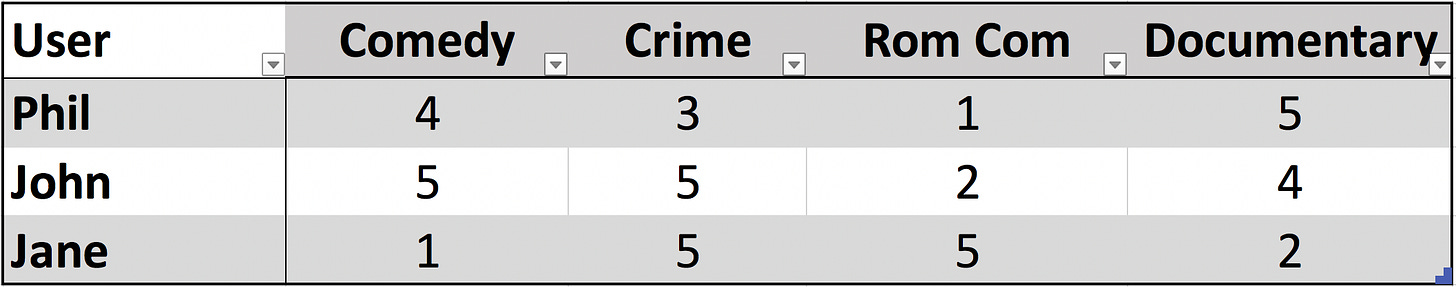

The table below is a basic representation of how a recommendation system like Netflix captures information. The rows represent users and the columns are genres of movies in which they might be interested. The numbers represent a calculated figure based on metrics such as view count, hours watched, likes, and subscriptions. Higher numbers mean the user prefers that category:

Phil is a documentary and comedy buff, but not big on rom-coms.

John shares similar tastes with Phil, and his frequent rewatching of Michelle Pfeiffer films bumps the crime category up to a 5.

Jane thinks standup routines are crass, but is a sucker for Vince Vaughn in a tropical setting.

So how is this useful?

Imagine there is a 5th category added to the list called “Drama.” John logs in and sees that Murder on the Orient Express has been added to the catalog. He remembers Ms. Pfeiffer has a leading role, so he spends his afternoon watching it.

The next time Phil logs on, he may see a recommendation for Murder on the Orient Express. This is due to a collaborative filtering approach that measures the similarity between users. Phil and John share interests, so it’s reasonable to assume that this new category will be scored similarly.
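To make the idea concrete, here is a minimal sketch of user-based collaborative filtering in plain Python. It is only an illustration, not how Netflix actually does it: apart from John's crime score of 5, every number below is made up to match the descriptions of Phil, John, and Jane, and the `cosine_similarity` and `predict` helpers are hypothetical names.

```python
from math import sqrt

# Hypothetical preference scores; only John's crime score of 5 comes from the text.
# Genre order: documentary, comedy, rom-com, crime.
ratings = {
    "Phil": [5, 4, 1, 4],
    "John": [4, 5, 1, 5],
    "Jane": [2, 1, 5, 2],
}

# Assumed scores for the new "Drama" category. Phil has not watched any yet.
drama = {"John": 5, "Jane": 2}


def cosine_similarity(a, b):
    """Return the cosine of the angle between two score vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = sqrt(sum(x * x for x in a))
    norm_b = sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def predict(user, known_scores):
    """Similarity-weighted average of other users' scores for the new category."""
    weighted_sum = 0.0
    total_weight = 0.0
    for other, score in known_scores.items():
        if other == user:
            continue
        weight = cosine_similarity(ratings[user], ratings[other])
        weighted_sum += weight * score
        total_weight += weight
    return weighted_sum / total_weight


similarity_to_john = cosine_similarity(ratings["Phil"], ratings["John"])
similarity_to_jane = cosine_similarity(ratings["Phil"], ratings["Jane"])
phil_drama = predict("Phil", drama)

print(f"Phil vs. John: {similarity_to_john:.2f}")
print(f"Phil vs. Jane: {similarity_to_jane:.2f}")
print(f"Predicted drama score for Phil: {phil_drama:.2f}")
```

Because Phil's scores line up more closely with John's than with Jane's, John's high drama score carries more weight in the prediction. Production systems typically rely on far larger matrices and more elaborate models, but the intuition is the same.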

This type of suggestion makes the experience feel personalized and improves the chance that the viewer sticks around for another movie. It also drives engagement, and the system will discount or downplay options that don’t increase screen time. The system isn’t “smart”; it is a large-scale pattern matcher that continuously optimizes for engagement.

Focusing on engagement is good for the wallet, but bad for the soul. Videos that tend to cause binge-watching are often low effort or inflammatory; climate change denial, extremism, and conspiracy theories have been shown to appear at alarmingly higher than average rates in the sidebar.

Why? Because views == $$$, baby.

What’s next?

A dangerous trend is emerging: as these systems continue to improve, our time spent on the applications increases. More time on the applications means more information to drive the recommendations and an improved ability to keep users engaged. Algorithmic feeds and infinite scrolling are the UI patterns that make applications like Instagram, Twitter, and Facebook so addictive. Combine them with a deep understanding of how to keep us on the site for just one more click, and it becomes a self-perpetuating cycle.

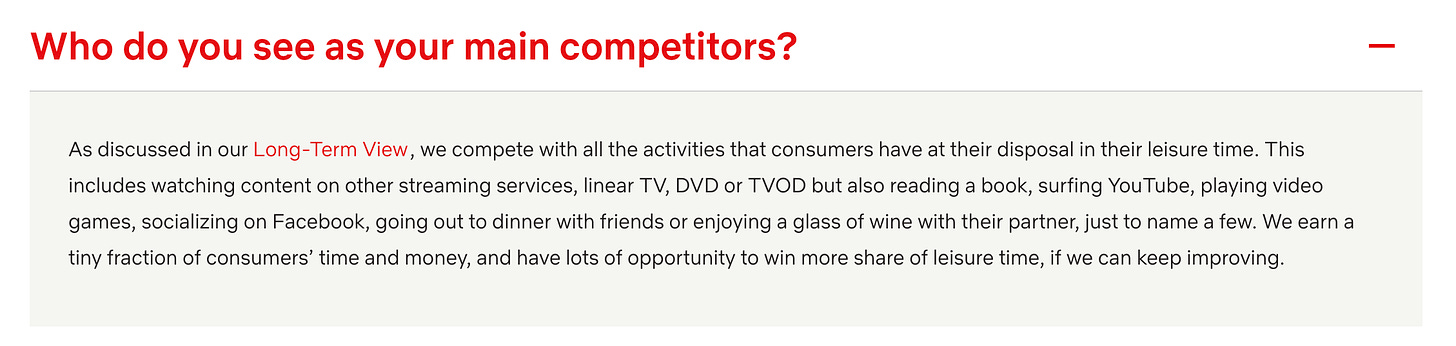

On Netflix’s FAQ page, there is an open reference to this behavior:

I’ll go ahead and emphasize the point:

We compete with all the activities that consumers have at their disposal in their leisure time […] going out to dinner with friends or enjoying a glass of wine with their partner […]

Netflix isn’t trying to show you better movies. They are trying to make sure you spend every free moment you have on their website. YouTube’s curation of an illegal child pornography collection resulted from an oversight that values engagement above all else. This week, their team released a statement announcing that they would be “reducing borderline content and raising up authoritative voices.” This includes:

[…] videos that promote or glorify Nazi ideology, which is inherently discriminatory. Finally, we will remove content denying that well-documented violent events, like the Holocaust or the shooting at Sandy Hook Elementary, took place.

The expectation has been set that private companies will curate the content that we see online. A host of problems emerges when we consider the implications of a politically mono-cultured corporation setting the bar for what is acceptable. The centralization of internet authority has set up technology incumbents to act as the arbiters of truth. Facebook’s internal solutions are inconsistent and ineffectual for the scale of the problem. Internal AI teams have admitted that combating abusive content and hate speech on a global scale is too big a job for a centralized party to handle.

Next time you fire up your default social media app, stop for a moment and reflect on what is being displayed. When you receive recommendations for content that seem to reinforce your beliefs, consider that you are not being shown the objective truth, but instead a version of the world that will make you happy.

Fake News

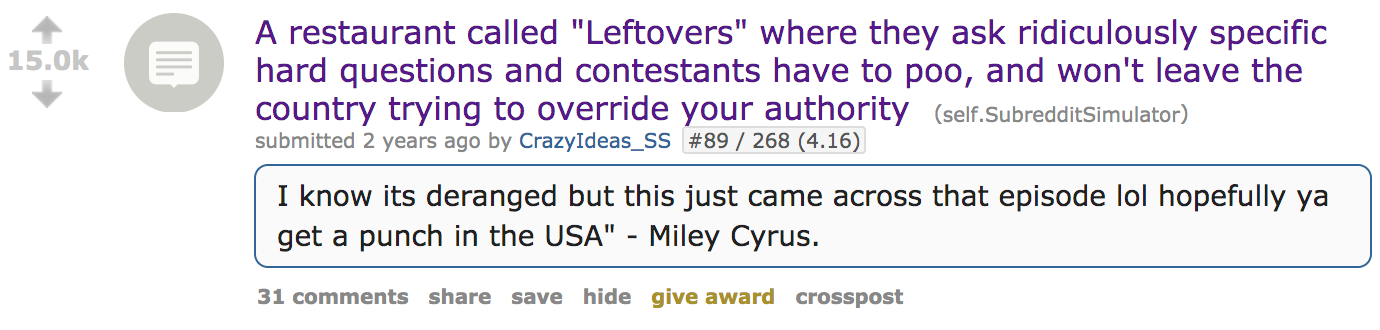

You may be familiar with Reddit’s Subreddit Simulator, a community where content is generated by a type of algorithm known as a Markov chain. A Markov chain picks the next word in a sentence based only on the word or words that came just before it, using probabilities learned from a known corpus of text. An example:

The text of this title came from a subreddit called “Crazy Ideas” where people post catchy, one-liner titles with absurd business ideas. Based on this input, the Markov chain came up with a title that managed to garner 15k upvotes. Upvotes are how Reddit users propel content to the “Front Page” of the website, where they are likely to gain more attention.
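For the curious, the next-word idea behind a Markov chain fits in a few lines of Python. This is a rough sketch rather than the Subreddit Simulator's actual code: it assumes a first-order, word-level chain, the three-line corpus is invented, and `build_chain` and `generate` are hypothetical helper names.

```python
import random
from collections import defaultdict

# Tiny invented corpus; a real bot would train on thousands of post titles.
corpus = [
    "a vending machine that sells naps",
    "a gym that pays you to nap",
    "a vending machine that pays you to exercise",
]


def build_chain(sentences):
    """Map each word to the list of words observed right after it."""
    chain = defaultdict(list)
    for sentence in sentences:
        words = sentence.split()
        for current, following in zip(words, words[1:]):
            chain[current].append(following)
    return chain


def generate(chain, start, max_words=12, seed=None):
    """Walk the chain; each next word depends only on the current word."""
    rng = random.Random(seed)
    words = [start]
    # Stop at the length limit or when the current word has no known follower.
    while len(words) < max_words and words[-1] in chain:
        words.append(rng.choice(chain[words[-1]]))
    return " ".join(words)


chain = build_chain(corpus)
title = generate(chain, "a", seed=1)
print(title)
```

Followers are stored with repeats, so a word that appears more often after the current one is proportionally more likely to be picked. The chain only ever looks at the current word, which is why its output tends to drift: it has no memory of how the sentence began.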

A new subreddit called “Subreddit Simulator GPT2” has recently gained popularity due to the quality of the posts. This simulator uses OpenAI’s GPT-2 model to generate the fake posts and comments. The results are extremely realistic, and unlike Markov chains, GPT-2 has the ability to carry context through a story. See this example from the front page:

Not only is the title grammatically correct, but the body of the argument is coherent and several paragraphs long. The sophistication of this model exceeds previous techniques in the generation of fake news.

If you want to play with GPT-2 for yourself, check out the GPT-2 bot on Twitter, or head over to TalkToTransformer.com.

📚 Reading

Book recommendation for the week:

The Dark Forest by Cixin Liu is a fascinating dive into Chinese culture and thinking, wrapped within the plot of a near-future science fiction novel. Liu is the most popular sci-fi writer in China today, and his sequel to The Three-Body Problem manages to expand on the interstellar drama that won him the Hugo Award. Liu’s engineering mindset is clearly demonstrated in his works; the plot revolves around an underachieving academic researcher who is called forward to create a plan that will save humanity from an advanced invasion. America is run by lawyers and China is run by computer scientists; this book is a clear demonstration of the importance of having leaders with STEM backgrounds in places of power.

🔗 Links

The SEC charged messaging company Kik with conducting an unregistered securities offering through its sale of $100M worth of the digital currency Kin. Kik replied with DefendCrypto, a fund intended to support legal fees and gain some much-needed clarity around ICOs (now being referred to by the consulting propaganda machines as “STO” for “Security Token Offering”). The best part? You can donate Kin to the fund, even though Kik is the majority owner with nearly 90% allocated to their private coffers in the presale. Link.

Researchers claim that planetary orbital cycles are the cause of regularly recurring solar activity that has puzzled the scientific community. Similar to tidal forces on Earth caused by the Moon, the planets’ gravitational effects trigger an oscillation that sets off a complex perturbation within the plasma layer of our star. Neat. Link.

Stack Overflow released their yearly developer survey. My favorite excerpt:

Q: Developer Opinions on Blockchain Technology

A:

29.2% Useful across many domains and could change many aspects of our lives

26.2% Useful for immutable record keeping outside of currency

16.8% A passing fad

15.6% An irresponsible use of resources

12.2% Useful for decentralized currency (i.e., Bitcoin)

¯\_(ツ)_/¯ Guess the jury is still out. Link.

A really great firsthand summary of Japanese business culture and practices. Link.

Have an idea for a future topic? Send me an email at newsletter@philmohun.com

Follow me on Twitter and Medium.
