Who's The Real Expert?

As language models improve, programming will begin to look more like writing.

A sort of mania has swept over Tech Twitter in the past few weeks, fueled by a flood of impressive and unnerving demonstrations of GPT-3.

Technology companies do demos all the time, yet few have drawn the kind of public reaction that GPT-3 has. Part of the reason for the outsized response is that OpenAI created very few of the demos themselves. Instead, they released access to their product via a closed API, which allowed independent developers to do their marketing for them.

These indie demos are impressive precisely because they are not the flashy promotions of a multibillion-dollar company. Instead, we’ve seen projects from individual creators that mirror the functionality of state-of-the-art products with a few hours of work. Even more impressive are the brand new applications that were not possible before.

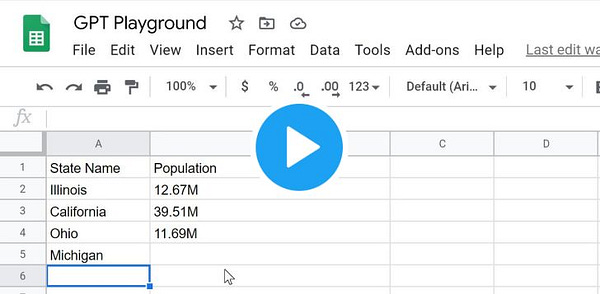

Here is GPT-3 completing a spreadsheet in Excel with only a few examples:

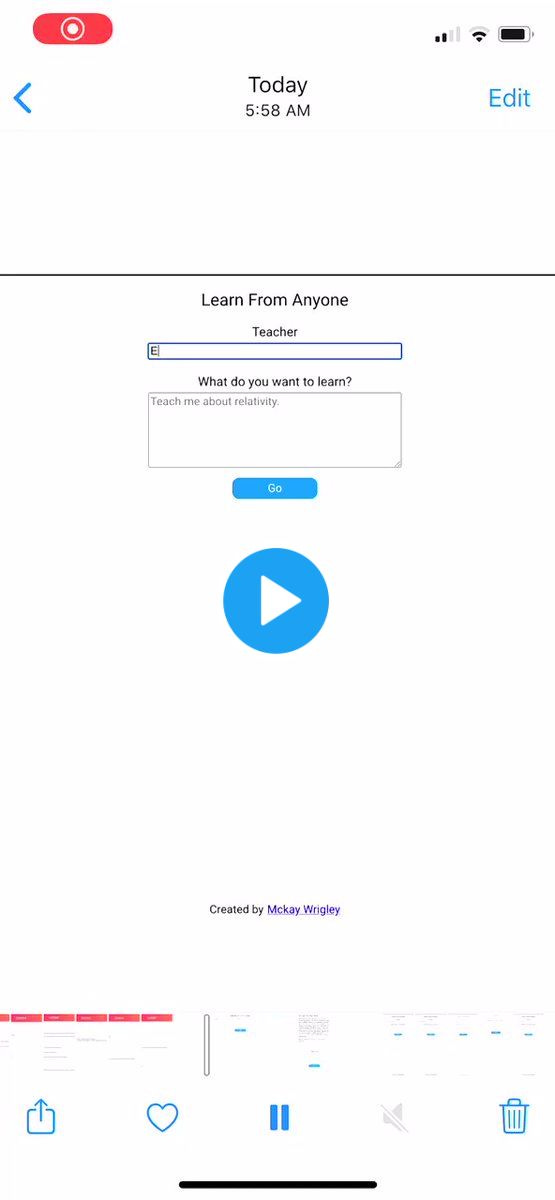

Here is GPT-3 answering questions in the style of famous entrepreneurs and writers:

Here’s a love letter … from a toaster:

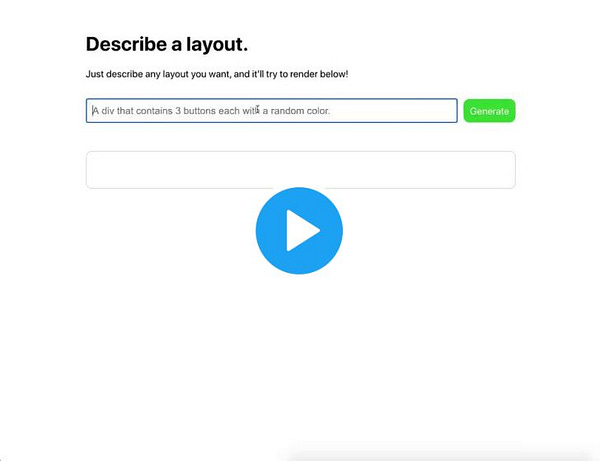

Here is GPT-3 writing code:

The beauty of GPT-3 is the ability to use natural language to express problems that previously required programming ability to solve. This is powerful because it provides a way for the people experiencing problems to solve them by simply expressing their request clearly.

Until now, software development has depended on experts to translate requirements into code. This process is complicated because the people experiencing the problems are usually different from those with the ability to solve them. The end goal of programming is for the person experiencing the problem to do the programming, without an “expert” in the middle.

Since the invention of software, we’ve moved through higher and higher layers of abstraction, from machine code to FORTRAN and eventually to languages like Python. These languages have served us well, but they tend to be written by experts in computer science rather than experts in communication. Most programmers who write new languages tend to be logical people, so they write logical languages with very little tolerance for error or redundancy. This has resulted in programming languages that look very different from the languages that we use to communicate with one another.

GPT-3 offers the capability to take a natural language input, match it against the context of the sentence, then propose a query that is understandable to the layperson. This abstraction allows us to understand the problem better, rather than just analyzing the way the problem manifests itself in code. Having a programming language that mirrors our everyday communication is an important step forward in making the innovations from software broadly available.

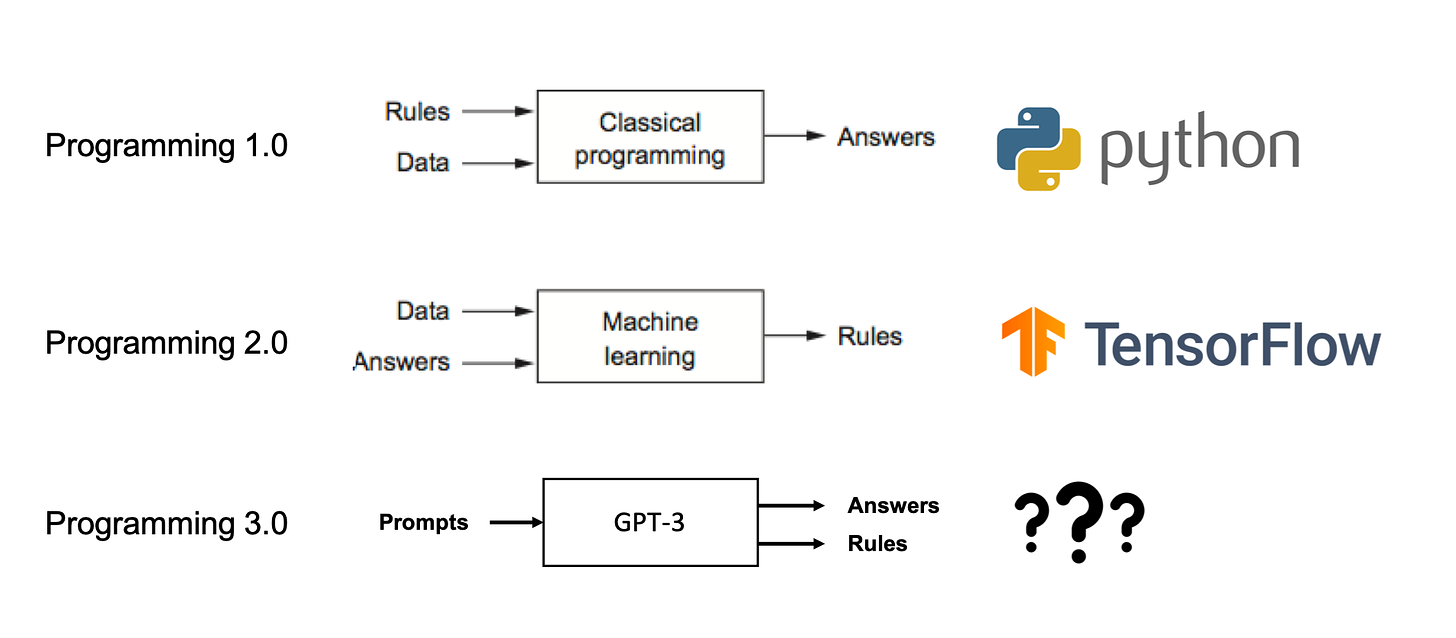

Subscribers of this newsletter are familiar with a concept that I’ve been exploring called Programming 3.0. Programming 3.0 describes the skills needed to interact with these models successfully.

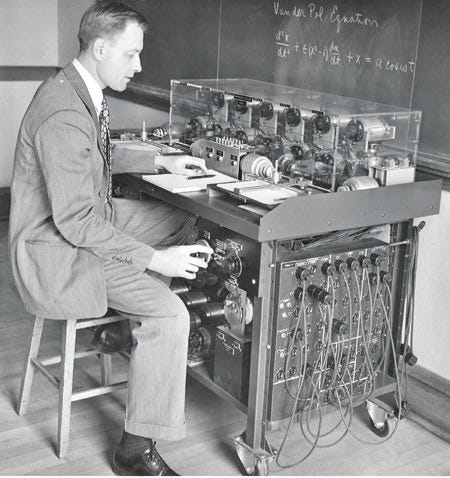

The first generation of programming, programming 1.0, was based on the idea that we can use machines to automate repetitive tasks. The history of modern software can be traced back to the mid-1900s when early computers were used to calculate ballistic missile trajectories for wartime activities. Programming 1.0 is based on the idea of explicit languages and rules that allow developers to crystallize ideas into a structured form.

Source: The differential analyzer, an early computing device created at MIT for computing guided-missile trajectories.

The next generation of programming, programming 2.0, is built on the assumption that programming languages exist. Programming 2.0 uses machine learning to find patterns in data that are otherwise difficult for humans to define manually. This revolution was kicked off with the release of ImageNet and the realization that large labeled datasets can effectively train neural networks.
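To make the contrast between the first two generations concrete, here is a minimal sketch in Python. The spam-filter task, the function names, and the toy data are all illustrative assumptions rather than anything from a real system. The first function is programming 1.0: a human writes the rule by hand. The second part is programming 2.0 in miniature: the rule has the same shape, but its threshold is picked from labeled examples.

```python
# Illustrative sketch only: the task, names, and data are hypothetical.

def is_spam_v1(exclamation_count: int) -> bool:
    """Programming 1.0: a human writes the rule explicitly."""
    return exclamation_count > 3


def learn_threshold(examples: list[tuple[int, bool]]) -> int:
    """Programming 2.0 in miniature: pick the threshold that best fits labeled data."""
    candidates = sorted({count for count, _ in examples})
    best_threshold, best_correct = candidates[0], -1
    for threshold in candidates:
        # Count how many labeled examples this candidate rule gets right.
        correct = sum((count > threshold) == label for count, label in examples)
        if correct > best_correct:
            best_threshold, best_correct = threshold, correct
    return best_threshold


# Toy labeled dataset: (number of exclamation marks, is it spam?)
labeled = [(0, False), (1, False), (2, False), (5, True), (7, True), (9, True)]
threshold = learn_threshold(labeled)


def is_spam_v2(exclamation_count: int) -> bool:
    """The learned rule: same shape as v1, but the number came from data."""
    return exclamation_count > threshold
```

Real machine learning systems fit millions of parameters instead of one, but the division of labor is the same: the human supplies labeled data, and the procedure supplies the rule.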

After using GPT-3, I’m increasingly convinced that we’re seeing the birth of programming 3.0. New paradigms in technology are often built on the assumption that the previous layer in the stack exists. We’ve been in the “machine learning” era since ~2014. While there is still plenty of business opportunity for both programming 1.0 and 2.0, this new model of human-computer interactions feels different.

Programming 3.0 assumes that trained models exist. Instead of requiring humans to write code or design datasets, programmers will become computer psychologists. Their specialty will be in the manipulation of prompts that coax out useful answers from extremely large models trained on huge datasets.
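Here is a rough sketch of what that kind of "program" can look like, in Python. The function name and the prompt layout are my own assumptions for illustration, not a format prescribed by OpenAI, and the sketch deliberately stops short of calling any model. It only assembles the text: an instruction, a few worked examples, and a final input left unanswered for the model to complete.

```python
# Illustrative sketch only: the function name and prompt layout are assumptions.

def build_few_shot_prompt(
    instruction: str,
    examples: list[tuple[str, str]],
    query: str,
) -> str:
    """Assemble an instruction, worked examples, and an unanswered query into one prompt."""
    lines = [instruction, ""]
    for source, target in examples:
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
        lines.append("")
    # The final output is left blank for the model to fill in.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    instruction="Give the two-letter postal abbreviation for each US state.",
    examples=[("California", "CA"), ("Texas", "TX")],
    query="Michigan",
)
print(prompt)
```

Notice that the "code" here is mostly the examples themselves. Changing the behavior means changing the instruction or swapping the examples, not rewriting the logic.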

The next wave of programmers will need to understand how human language can be used to efficiently guide models to solve problems that can’t be solved by human-written code. This is a big challenge, and we’re just at the very beginning of it, but I think it will open up new and undiscovered ways to create value in the world.

An essential part of the programming 3.0 model is that it’s still possible to work within the “old” paradigm to get good results. We might not want to model every application in an automated way, but this is a huge breakthrough because it means that we now have a way to “convey” our intentions to the machine without needing to articulate them fully.

This is one reason why I think we’ll still need a mix of programming 1.0, 2.0, and 3.0 — when we communicate with computers in the future, we will use the highest level language that works for our particular situation. Situations that tolerate no ambiguity will require explicit and interpretable explanations (programming 1.0), while other problems that can handle ambiguity will favor a natural language approach (programming 3.0).

The limitations of programming today mean that we approach every problem as though it has an explicit answer. Programming 1.0 gave us a hammer, and we’ve spent the last fifty years pounding everything into a Jira backlog. Without the excuse of the expert in the middle, it will become apparent how poorly defined most of our problems are. Programming 3.0 is limited only by our ability to clearly articulate our issues and choose from the options presented to us.

GPT-3 expands the number of problems that can be expressed in software, but it doesn’t erase them. Until we have a better understanding of human communication, it is unlikely our software problems will vanish.

Sunday Scaries is a newsletter that answers simple questions with surprising answers. The author of this publication is currently living from his car and traveling across the United States.

[1] Hamming talks about the progression of programming languages in ‘The Art of Doing Science and Engineering’.

[2] I wrote some of this using GPT-3. I’ll let you try to puzzle out which sections weren’t written by me.